Dr. Noel Sharkey and Dr. Duncan MacIntosh, two of the world’s leading thinkers on the ethics, role and implications of autonomous weapons, faced off on March 21 to debate “War in the Age of Intelligent Machines.” The highlight of King’s 2018 Public Lecture Series, Automatons! From Ovid to AI, the event attracted a standing-room-only audience of 200 students, faculty, military and community members to the Scotiabank Theatre at Saint Mary’s University.

While artificial intelligence is already used in modern weaponry, such as drones, humans still guide the action remotely. Autonomous weapons, by contrast, will be self-regulating, capable of making tactical decisions on their own, without human guidance. For both Sharkey and MacIntosh, this rapidly approaching reality raises serious moral and ethical issues for the future conduct of warfare.

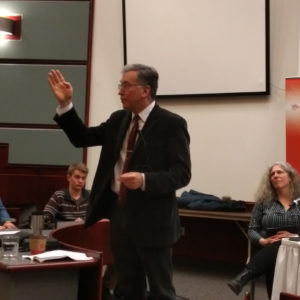

Prof. Duncan MacIntosh

MacIntosh, a professor of philosophy at Dalhousie University and an advisor to several U.S. think-tanks studying the topic, opened the debate by acknowledging that Sharkey is concerned that “killer robots” will be deployed prematurely without adequate regulation. By contrast, MacIntosh believes the carefully considered use of such weapons can offer “subtle, plausible uses and, in some situations, can support morally superior outcomes.”

“We need to understand what their design gives them the capacity to do and then only deploy them on missions where that would be a good competence,” he explained, citing missions such as patrolling a demilitarized zone, aerial combat, or dangerous tasks such as working in environmentally contaminated areas or where soldiers risk physical injury.

MacIntosh maintained that robotic weapons, strategically and ethically deployed, could save the lives of both soldiers and civilians, because they can be made as precise as their design allows. “There are times when you need a vastly more intelligent machine, however, such as when combatants are surrendering, or you’re faced with child soldiers or civilians,” he explained. “If they’re not going to have that effect, don’t use them.”

As autonomous weapons are already being built, MacIntosh argued, “It falls to people like Dr. Sharkey and me to force conversations among politicians, the military, diplomats, academics and the manufacturers to think through the challenges and consequences.”

Prof. Noel Sharkey

Sharkey, a professor of artificial intelligence and robotics at the University of Sheffield, chairs the International Committee for Robot Arms Control, which is pressing for a total ban on autonomous weapons. For him, “talk is wasted.” He argued that current systems of artificial intelligence are simply inadequate to program these weapons to respect the principles of the laws of war and international humanitarian law.

“The concept of precaution in war says that if you’re not sure someone is a civilian or a combatant, assume [they’re] a civilian,” said Sharkey. “But an algorithm can’t do that; a machine can’t exercise judgement, especially when faced with all the unexpected situations that arise on a battlefield.”

“Humans can be held accountable for their actions. A robot cannot; it runs according to its programming,” Sharkey argued. These risks will likely be magnified by an overreliance on computer systems and the potential for software malfunction and degradation, computing errors, enemy infiltration of the industrial supply chain, enemy cyber attacks and human error, among other issues.

But what concerned Sharkey the most was the potential of robots to accelerate the pace and level of destruction of warfare itself. The U.S., Russia and China are already running aggressive weapons development programs, while Turkey recently declared its intention to add autonomous weapons to its arsenal. For Sharkey, it’s only a matter of time before “other countries develop cheap copies.”

“When both sides have robots in the field, it won’t be the end of war,” Sharkey said. “When my robots beat your robots, you’re still going to fight.”

Two lectures remain in the Automatons! series:

March 28: Unmaking People: The Politics of Negation from Frankenstein to Westworld. A lecture marking the 200th anniversary of the Mary Shelley classic, with Despina Kakoudaki of American University in Washington, author of Anatomy of a Robot: Literature, Cinema and the Cultural Work of Artificial People.

April 4: Living Artificially, with King’s alumna and University of Pennsylvania professor Stephanie Dick, BA(Hons)’07, author of Of Models and Machines.